‘Firehose’ of information confronts legislators studying internet use by children and AI


The South Dakota State Capitol, pictured on Nov. 2, 2022. (John Hult/South Dakota Searchlight)

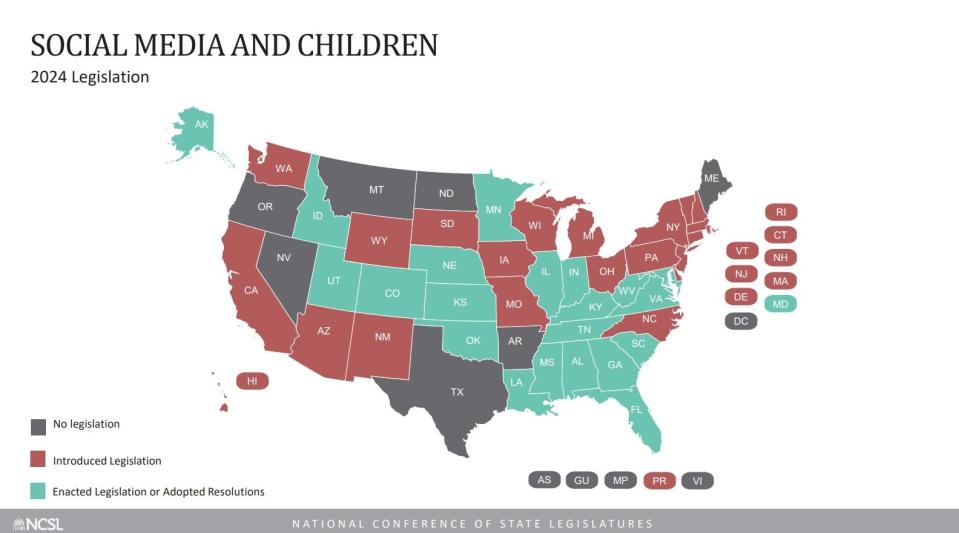

Hundreds of bills about artificial intelligence and internet use by minors have been filed in statehouses in recent years.

Even Heather Morton, who tracks state legislation as an analyst for the nonpartisan National Conference of State Legislatures, has a hard time keeping up.

“This probably feels a little bit like a firehose,” Morton told South Dakota lawmakers.

“Yes, it does,” said Rep. Mike Weisgram of Fort Pierre.

Weisgram is co-chairing a summer study committee, which met for the first time Tuesday in Pierre. The Legislature opted to appoint the committee after rejecting proposed regulations on AI and internet use by minors during last winter’s legislative session.

Nearly all states studying AI, social media

More than 300 bills or resolutions on AI have been introduced so far this year, Morton said.

There are proposals to force the disclosure of AI use, to require state agencies to develop AI policies, to ensure the provenance of the data used to train AI systems and to give patients the right to consent to the use of AI by their doctors.

There have even been proposals on “whether or not an AI system can or could be considered or granted status as a person,” Morton said.

The creation of study groups represented the single largest category of enacted AI laws, she said. That's what South Dakota did after rejecting two proposals: one from Sen. Liz Larson, D-Sioux Falls, that would have required disclosure of AI use in electoral communications, and another from Sen. David Johnson, R-Rapid City, that would have criminalized AI-generated "deepfakes" placing real people in sexually explicit photos or videos without their consent.

Lawmakers in Pierre did pass a law to criminalize AI-generated child pornography, which had the support of Attorney General Marty Jackley.

On the social media side, Arkansas and Ohio passed laws requiring kids to verify their age to use social media platforms. Those laws are on hold because of lawsuits filed on free speech grounds, Morton said. TikTok, meanwhile, challenged a Montana law banning the app within that state's borders.

“There is a preliminary injunction stopping Montana from enforcing that law,” Morton said.

With internet pornography, Morton pointed to states like Texas, which passed a bill requiring age verification to access websites where a third or more of the content is adult-oriented. That particular law has survived an attempt to block it, but the adult entertainment industry’s Free Speech Coalition has appealed in hopes of bringing it to the U.S. Supreme Court.

AI: Not new, but rapidly growing

José-Marie Griffiths, president of Dakota State University, said AI has been around in some form since the mid-1950s. There are four basic types:

Sequential algorithms, which are like a "recipe" of instructions that systems follow to do a job, such as alphabetizing names.

Pattern recognition, which can be used to find anomalies in datasets, making it possible for banks to send you an alert if your credit card is used in a foreign country.

Classification, which could involve using systems to differentiate between images, initially with the aid of a human.

Statistical prediction, which uses probabilities to determine what might come next.

As useful as AI can be, Griffiths said, the inevitability of its use by bad actors will require humans – and other AI systems – to combat new threats.

“Data poisoning,” or inserting incorrect data into an algorithm to disrupt or do damage, is of particular concern. DSU is studying data poisoning on roadways, where someone could disrupt traffic signals by feeding bad data into the systems controlling them.

“But you could do a lot more damage by going into the GPS system and just tweaking it a couple of degrees, literal degrees, and you’d have chaos everywhere, because everybody would be just off enough,” Griffiths said. “And that can be done.”

Educators dip a toe into AI without state regulation

Joe Graves, secretary of the Department of Education, said the state hasn’t moved to regulate AI in schools.

“Frankly, DOE is not aware of any districts struggling or seeking direction on what to do or what not to do with artificial intelligence in the classroom,” Graves said. “So right now, we’re taking a fairly hands off approach.”

The Associated School Boards of South Dakota has adopted a model AI policy, he said. It defines AI, prohibits its use by students unless directed to use it by their teachers, and says it’s OK for teachers to use it in the creation and refinement of lesson plans but cautions against “overuse” for that purpose.

Last fall, the school boards, school administrators and the state education department partnered with DSU on a four-session AI training led by David De Jong, dean of DSU's College of Education.

The idea was to get a sense of how teachers might use AI, and to teach them how to ask the right questions of systems like ChatGPT and use the answers to refine their lesson planning.

Graves told the committee that the group's leaders hadn't asked him about social media or adult websites, so he was unprepared for that part of the discussion. But when asked about smartphones, Graves said he has become personally convinced that they've had a deleterious effect on students, both in and outside the classroom.

“I’m not sure that a smartphone should be in the hands of a teenager, period,” Graves said.

Tech representative: Don’t go too far

David Edmonson of the tech industry trade group TechNet said states should first build data privacy laws. Only then should they target specific uses of AI, and they should only create new agencies or regulations when existing ones are shown to be ill-equipped to handle AI-related problems.

To do otherwise could stifle innovation and slow the adoption of valuable AI technologies that create efficiencies or save lives, he said.

He pointed to a California proposal that would require a watermark on AI-generated content. The ubiquity of digital tools with some AI elements, Edmonson said, would mean that “basically every single advertising image that would exist in print, online or on TV, would have to have a disclosure stating that that AI was used.”

With social media age verification laws, Edmonson’s message was blunt: Don’t pass them.

The trouble, he said, is that a law requiring proof of age sweeps up adults and forces them to hand off personal information to private companies to engage in speech protected by the First Amendment.

Yet Edmonson’s group supports age verification for pornography site access, as long as there are clear markers, such as the Texas standard that requires verification for sites where a third or more of the content is adult-oriented.

That struck Rep. Tony Venhuizen, R-Sioux Falls, as illogical.

“If we can do age verification for pornography, I don’t know why we couldn’t do that for social media,” Venhuizen said.

Rep. Bethany Soye, R-Sioux Falls, is also a member of the group. She introduced a bill for age verification on adult websites during the 2024 session. It passed the House of Representatives but failed in the Senate.

Soye asked Edmonson about suggestions that minors should not be allowed to use social media because social media companies require users to sign contracts.

“Another way that they’re viewing this is that children don’t have the capacity to enter into a contract,” Soye said.

Edmonson called the contracts question “an evolving area of the law,” and said he couldn’t offer any expertise on it.

Soye said the committee should find constitutional scholars who could address that question at a future meeting. Other members suggested inviting Attorney General Jackley to discuss efforts to address tech issues across state lines, some of which involve coordinated action by state attorneys general. Others suggested creating subcommittees to dig into specific aspects of social media, adult content and AI.


The post ‘Firehose’ of information confronts legislators studying internet use by children and AI appeared first on South Dakota Searchlight.